
Chris Par

Mar 2021

Overview

HDA, a Linux-based data analytics cluster, provides web interfaces. These interfaces can be used to manage and monitor cluster resources and services such as Spark. Other applications that you install on your cluster may also provide their own web interfaces.
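As a quick way to confirm that these web interfaces are reachable, the minimal Python sketch below issues an HTTP GET against each URL. The host name `hdm01` and the endpoint list are assumptions for illustration: 8080 and 4040 are Spark's defaults for the standalone master UI and the application UI, and your cluster may use different ports.

```python
import urllib.error
import urllib.request

# Hypothetical endpoint list: "hdm01" is an assumed host name; 8080 and 4040 are
# Spark's default standalone-master and application UI ports.
UI_ENDPOINTS = {
    "Spark master UI": "http://hdm01:8080/",
    "Spark application UI": "http://hdm01:4040/",  # only up while an application runs
}


def ui_is_up(url, timeout=3.0):
    """Return True if the URL answers an HTTP GET without an error status."""
    try:
        # urlopen raises HTTPError (a URLError subclass) for 4xx/5xx responses
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status < 400
    except (urllib.error.URLError, OSError):
        # Connection refused, DNS failure, timeout, or an HTTP error status
        return False


def report(endpoints=UI_ENDPOINTS):
    """Print one up/down line per web interface."""
    for name, url in endpoints.items():
        print(f"{name:22} {url:26} {'up' if ui_is_up(url) else 'down'}")
```

Calling `report()` from a host that can resolve `hdm01` prints one status line per interface; add entries to the dictionary for other applications that expose a web UI.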


Brady Chang

May 2021

Connecting Apache Spark to Greenplum

Prerequisites:

PostgreSQL JDBC driver (postgresql-42.2.19.jar)

Greenplum 6.16.0 (Installed and Online)

spark-3.0.2-bin-hadoop3.2 (Installed and Online)

# pyspark_test.py
from pyspark.sql import SparkSession

if __name__ == "__main__":
    # Put the PostgreSQL JDBC driver on the Spark classpath
    spark = SparkSession.builder.appName("gpdb test") \
        .config("spark.jars", "/opt/greenplum/connectors/postgresql-42.2.19.jar") \
        .getOrCreate()
    print("You are using Spark " + spark.version)
    # Greenplum's master accepts PostgreSQL-protocol connections on port 5432.
    # From another host, use the master host name instead, e.g.
    # jdbc:postgresql://mdw:5432/hyperscaleio
    url = "jdbc:postgresql://localhost:5432/hyperscaleio"
    properties = {
        "driver": "org.postgresql.Driver",
        "user": "gpadmin",
        "password": "changeme"
    }
    # A parenthesized, aliased subquery can be used in place of a table name
    df = spark.read.jdbc(
        url=url,
        table="(select team_name as tname from nba) as nba_tbl",
        properties=properties)
    # createTempView() returns None, so register the view separately from the read
    df.createTempView('tbl')
    spark.sql('select tname from tbl').show(30)

# Running pyspark_test.py

[root@mdw ~]# pyspark < pyspark_test.py

Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties

Setting default log level to "WARN".

To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).

/opt/spark/python/pyspark/context.py:227: DeprecationWarning: Support for Python 2 and Python 3

prior to version 3.6 is deprecated as of Spark 3.0. See also the plan for dropping Python 2 support

at https://spark.apache.org/news/plan-for-dropping-python-2-support.html

DeprecationWarning)

You are using Spark 3.0.2

+--------------------+
|               tname|
+--------------------+
|     DallasMavericks|
|  LosAngelesClippers|
|        Timberwolves|
|   Philadelphia76ers|
|        DeverNuggets|
|        IndianPacers|
|    MemphisGrizzlies|
|       BostonCeltics|
| GoldenStateWarriors|
|           Cavaliers|
|      HoustonRockets|
|         PhoenixSuns|
|  NewOrleansPelicans|
|      DetroitPistons|
|    CharlotteHornets|
| OklahomaCityThunder|
|    LosAngelesLakers|
|        OrlandoMagic|
|       NewYorkKnicks|
|      TorontoRaptors|
|     SanAntonioSpurs|
|    SacrementalKings|
|           MiamiHeat|
|      MilwaukeeBucks|
|        ChicagoBulls|
|PortlandTrailblazers|
|            UtahJazz|
+--------------------+
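Hard-coding `changeme` in the script is convenient for a test, but for anything shared it may be preferable to read the password from the environment. The helper below is a minimal sketch that builds the option dictionary for Spark's generic JDBC reader; the `GP_PASSWORD` variable name, the `src` alias, and the function name are assumptions, not part of the original setup.

```python
import os


def greenplum_jdbc_options(query, host="localhost", port=5432,
                           database="hyperscaleio", user="gpadmin",
                           password_env="GP_PASSWORD"):
    """Build options for spark.read.format("jdbc").options(**opts).load().

    The password is read from the environment variable named by password_env
    (a hypothetical name) instead of being written into the script.
    """
    password = os.environ.get(password_env)
    if password is None:
        raise RuntimeError(f"environment variable {password_env} is not set")
    return {
        "url": f"jdbc:postgresql://{host}:{port}/{database}",
        # Spark accepts a parenthesized, aliased subquery wherever a table name goes
        "dbtable": f"({query}) AS src",
        "user": user,
        "password": password,
        "driver": "org.postgresql.Driver",
    }

# Usage inside the PySpark script (requires a SparkSession named spark):
# opts = greenplum_jdbc_options("select team_name as tname from nba")
# spark.read.format("jdbc").options(**opts).load().createOrReplaceTempView("tbl")
```

Keeping the option building in plain Python means it can be checked without starting Spark; only the final `load()` needs a running session and the driver jar.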


Allison Chang

May 2021

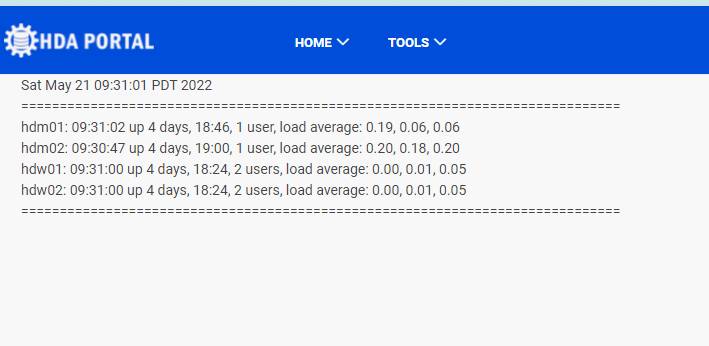

Hyperscale Data Appliance Admin Portal

The HDA Portal displays live or recorded statistics on Greenplum Database and HDA cluster node status.


Brady Chang

April 2022

Flask-Mail

Sending email is a critical business function.

HDA has a built-in component for sending email.

Here is an example from /opt/flask/shop/cart/app.py:

# Initialize the Mail app
from flask import Flask, render_template
from flask_mail import Mail, Message

app = Flask(__name__)

app.config.update(
    MAIL_SERVER='mail.gandi.net',
    MAIL_PORT=465,              # implicit SSL/TLS port
    MAIL_USERNAME='bradychang@hyperscale.io',
    MAIL_PASSWORD='xxxxxxxxx',
    MAIL_USE_TLS=False,         # no STARTTLS upgrade...
    MAIL_USE_SSL=True,          # ...because port 465 uses SSL from the first byte
    MAIL_DEFAULT_SENDER='bradychang@hyperscale.io')

mail = Mail(app)

# . . .

@app.route('/contactus', methods=['GET', 'POST'])
def contactus():
    app.logger.info('186: contactus()')
    recipients = ["brady.chang@gmail.com", "bradychang@hyperscale.io"]
    msg = Message('Hello from Hyperscale IO !', recipients=recipients)
    msg.body = "Hyperscale IO - analytics done right."
    app.logger.info('189: before sending message %s', msg.body)
    mail.send(msg)
    return "Email sent!"

Open a browser and go to http://hdm01:8888/contactus

You should see output like the following on the console and in hypermart.log:

[2022-04-19 13:40:46,609] INFO in app: 189: before sending message

Hyperscale IO - analytics done right.

send: 'ehlo [172.17.1.11]\r\n'

. . .

reply: retcode (250); Msg: b'mail.gandi.net

send: 'mail FROM: size=356\r\n'

reply: retcode (250); Msg: b'2.1.0 Ok'

send: 'rcpt TO:\r\n'

reply: retcode (250); Msg: b'2.1.5 Ok'

send: 'rcpt TO:\r\n'

reply: b'250 2.1.5 Ok\r\n'

reply: retcode (221); Msg: b'2.0.0 Bye'
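The `send:` and `reply:` lines above match the debug trace that Python's `smtplib` prints when its debug level is raised (Flask-Mail sends through `smtplib`). For comparison, here is a minimal standard-library sketch of the same exchange: implicit SSL on port 465, matching `MAIL_USE_SSL=True`, with debugging turned on. The function names are hypothetical and the credentials are placeholders you would supply.

```python
import smtplib
from email.message import EmailMessage


def build_message(sender, recipients, subject, body):
    """Assemble a plain-text message; recipients is a list of addresses."""
    msg = EmailMessage()
    msg["From"] = sender
    msg["To"] = ", ".join(recipients)
    msg["Subject"] = subject
    msg.set_content(body)
    return msg


def send_over_ssl(msg, host, username, password, port=465, debug=True):
    """Send msg over implicit SSL/TLS, printing the SMTP dialogue when debug is on."""
    with smtplib.SMTP_SSL(host, port) as smtp:
        if debug:
            smtp.set_debuglevel(1)  # writes send:/reply: lines to stderr
        smtp.login(username, password)
        smtp.send_message(msg)
```

For example, `send_over_ssl(build_message("bradychang@hyperscale.io", ["bradychang@hyperscale.io"], "Hello from Hyperscale IO !", "Hyperscale IO - analytics done right."), "mail.gandi.net", "bradychang@hyperscale.io", "xxxxxxxxx")` would print an EHLO / MAIL FROM / RCPT TO / QUIT dialogue similar to the one above.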


Allison Chang

May 2022

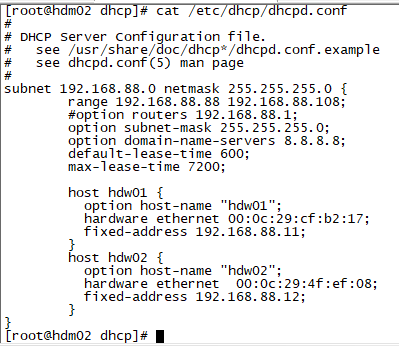

Hyperscale Data Appliance DHCP Configuration

After editing /etc/dhcp/dhcpd.conf so that it resembles the configuration shown in the image above, execute the following:

systemctl restart dhcpd

systemctl status dhcpd

dhcpd.service - DHCPv4 Server Daemon

Active: active (running) since ...

Main PID: 22875 (dhcpd)

Status: "Dispatching packets..."

[root@hdm02 ~]# systemctl status dhcpd

dhcpd.service - DHCPv4 Server Daemon

Loaded: loaded (/usr/lib/systemd/system/dhcpd.service; enabled)

Active: active (running)

hdm02 dhcpd[6397]: DHCPREQUEST for 192.168.88.11 ..

hdm02 dhcpd[6397]: DHCPACK on 192.168.88.11 to ...

hdm02 dhcpd[6397]: DHCPREQUEST for 192.168.88.12 ...

hdm02 dhcpd[6397]: DHCPACK on 192.168.88.12 to ...
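To confirm which addresses the server has handed out, the `DHCPACK` lines are the useful ones. The following Python sketch pulls the acknowledged IPv4 addresses out of lines like those above. It assumes the standard ISC dhcpd message wording; feeding it the output of `journalctl -u dhcpd --no-pager` is one possible way to use it, and the function name is illustrative.

```python
import re

# Matches ISC dhcpd lines such as "dhcpd[6397]: DHCPACK on 192.168.88.11 to ..."
ACK_RE = re.compile(r"dhcpd\[\d+\]: DHCPACK on (\d{1,3}(?:\.\d{1,3}){3})")


def acked_addresses(lines):
    """Return the distinct IPv4 addresses acknowledged by dhcpd, in first-seen order."""
    seen = []
    for line in lines:
        match = ACK_RE.search(line)
        if match and match.group(1) not in seen:
            seen.append(match.group(1))
    return seen
```

Because `DHCPREQUEST` lines are ignored, an address appears in the result only once the server has actually confirmed the lease.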


Brady Chang

May 2022

Integration

Configure Central Logging

Syslog Server Setup

Uncomment the following lines in /etc/rsyslog.conf so that the syslog server listens on UDP and TCP port 514:

[root@hdm01 etc]# vi /etc/rsyslog.conf

# Provides UDP syslog reception

$ModLoad imudp

$UDPServerRun 514

# Provides TCP syslog reception

$ModLoad imtcp

$InputTCPServerRun 514

[root@hdm01 etc]# systemctl restart rsyslog
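Once rsyslog has been restarted, one way to check that the UDP listener accepts remote messages is to send a test record from another host. This is a minimal sketch using Python's standard `logging.handlers.SysLogHandler`; the function name and message text are illustrative, and with rsyslog's default rules the message would typically land in /var/log/messages on the server.

```python
import logging
import logging.handlers
import socket


def send_syslog_test(host, port=514, text="hda central logging test"):
    """Send one INFO message over UDP to a remote rsyslog listener (imudp)."""
    handler = logging.handlers.SysLogHandler(address=(host, port),
                                             socktype=socket.SOCK_DGRAM)
    logger = logging.getLogger("hda.syslog.test")
    logger.propagate = False  # keep the test message out of local handlers
    logger.setLevel(logging.INFO)
    logger.addHandler(handler)
    try:
        logger.info(text)  # sent with facility "user", severity "info"
    finally:
        logger.removeHandler(handler)
        handler.close()
```

For example, `send_syslog_test("192.168.88.10")` run on a client, followed by `grep "hda central logging test" /var/log/messages` on the server.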

Syslog Client Setup

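Append the following line to /etc/rsyslog.conf on each client. The @@ prefix forwards all messages (*.*) over TCP; a single @ would forward them over UDP: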

*.* @@192.168.88.10:514

[root@hdw01 etc]# systemctl restart rsyslog
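On a larger cluster, editing each client's /etc/rsyslog.conf by hand is easy to get wrong. The helper below is a hypothetical sketch that appends the forwarding rule only if it is not already present, so running it twice leaves a single copy; the default rule is the one used above.

```python
def ensure_forwarding_rule(conf_path, rule="*.* @@192.168.88.10:514"):
    """Append rule to an rsyslog config file unless an identical line exists.

    Returns True if the file was changed, False if the rule was already there.
    """
    with open(conf_path, encoding="utf-8") as f:
        text = f.read()
    if rule in (line.strip() for line in text.splitlines()):
        return False
    with open(conf_path, "a", encoding="utf-8") as f:
        # Start on a fresh line if the file does not end with a newline
        if text and not text.endswith("\n"):
            f.write("\n")
        f.write(rule + "\n")
    return True
```

Run it as root on each client, then restart rsyslog only when it returns True.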

Create an external table to view the log

[gpadmin@hdm01 ext]$ pwd

/opt/greenplum/ext

[root@hdm01 ext]# chmod 664 /var/log/messages

[gpadmin@hdm01 ext]# ln -sf /var/log/messages messages

[gpadmin@hdm01 ~]$ nohup gpfdist -p 8801 -d /opt/greenplum/ext -l /home/gpadmin/gpfdist.log &

Create the External Table

-- var.log.messages
DROP EXTERNAL TABLE IF EXISTS var_log_messages;
CREATE READABLE EXTERNAL TABLE var_log_messages
(
    msg text
)
LOCATION ('gpfdist://hdm01:8801/messages')
FORMAT 'TEXT';

Test a select from the external table

[gpadmin@hdm01 scripts]$ psql -d hyperscaleio

hyperscaleio=# select msg from var_log_messages where msg like '%hdm01%';

msg

------------------------------------------------------

May 22 03:40:01 hdm01 rsyslogd: [software="rsyslogd"

May 22 03:44:17 hdm01 ntpd_intres[779]: 0.

May 22 03:44:23 hdm01 ntpd_intres[779]: 1.

May 22 03:44:29 hdm01 ntpd_intres[779]: 2.

May 22 03:44:35 hdm01 ntpd_intres[779]: 3.

May 22 03:50:01 hdm01 systemd: Started Session user root.

May 22 04:00:01 hdm01 systemd: Started Session user root.
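Because the external table exposes each log line as a single `msg` column, any per-field filtering has to split the text. As a client-side illustration, this Python sketch parses the traditional rsyslog line format (`Mmm dd hh:mm:ss host program[pid]: message`) seen in the output above. The regular expression is an assumption that fits these samples and may need adjusting for other log formats.

```python
import re

# Traditional rsyslog file format: "Mmm dd hh:mm:ss host program[pid]: message"
LINE_RE = re.compile(
    r"^(?P<ts>\w{3}\s+\d{1,2} \d{2}:\d{2}:\d{2}) (?P<host>\S+) "
    r"(?P<prog>[^\[:\s]+)(?:\[(?P<pid>\d+)\])?: (?P<msg>.*)$"
)


def parse_syslog_line(line):
    """Return a dict of ts/host/prog/pid/msg, or None if the line does not match.

    pid is None for programs that log without a [pid] suffix.
    """
    match = LINE_RE.match(line)
    return match.groupdict() if match else None
```

For example, rows fetched with `select msg from var_log_messages` can be passed through `parse_syslog_line` and grouped by the `prog` field.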


Allison Chang

May 2022

HDA Portal Logging Configuration

In /opt/flask/hda_portal/app.py:

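import logging
from flask import Flask

app = Flask(__name__)
# Configure the file handler once at startup rather than inside the route;
# otherwise every request to "/" attaches another handler and lines repeat.
handler = logging.FileHandler("hda_portal.log")
handler.setLevel(logging.DEBUG)
formatter = logging.Formatter("%(asctime)s;%(levelname)s;%(message)s")
handler.setFormatter(formatter)
app.logger.addHandler(handler)  # Add to built-in logger
app.logger.setLevel(logging.DEBUG)  # DEBUG records are dropped unless app.debug is on

@app.route("/")
def main():
    app.logger.debug("L18:main debug")
    # . . . remainder of the view, which must return a response
    return "HDA Portal"  # placeholder response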
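If the handler setup has to live inside a function (for example an application factory or a request hook), guarding against duplicates keeps repeated calls from multiplying log lines. The following is a minimal sketch with a hypothetical helper name; it uses only the standard `logging` module, so Flask's `app.logger` can be passed to it directly.

```python
import logging
import os


def attach_portal_file_handler(logger, path="hda_portal.log"):
    """Attach a DEBUG-level FileHandler for path to logger exactly once.

    Calling it again with the same path returns the existing handler instead of
    adding a duplicate, so each record is written to the file only once.
    """
    target = os.path.abspath(path)
    for existing in logger.handlers:
        # FileHandler stores the absolute path in baseFilename
        if isinstance(existing, logging.FileHandler) and existing.baseFilename == target:
            return existing
    handler = logging.FileHandler(target)
    handler.setLevel(logging.DEBUG)
    handler.setFormatter(logging.Formatter("%(asctime)s;%(levelname)s;%(message)s"))
    logger.addHandler(handler)
    logger.setLevel(logging.DEBUG)  # let DEBUG records reach the handler
    return handler
```

Usage: `attach_portal_file_handler(app.logger)` can then be called from any code path without producing duplicate entries in hda_portal.log.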
